Traffic distribution with Atlassian Data Center

In this guide, we'll take you through the basics of traffic handling for Atlassian Data Center products.

This guide applies to clustered Data Center installations. Learn more about the different Data Center deployment options.

Overview

The load balancer you deploy with Data Center is a key component that allows you to manage traffic across the cluster to ensure application stability and an optimal user experience as usage increases. Load balancing serves three essential functions:

- Distributes traffic efficiently across multiple nodes

- Ensures high availability by sending traffic only to nodes that are online (requires health check monitoring)

- Facilitates adding or removing nodes based on demand

This article focuses on the first function. It describes how standard traffic distribution and more advanced traffic shaping with a load balancer help you manage your application's performance.

Data Center supports any software or hardware load balancer, but requires "sticky sessions" to be enabled so that each user stays bound to a single node for an entire session. This article assumes you're monitoring your application cluster.

Standard traffic distribution

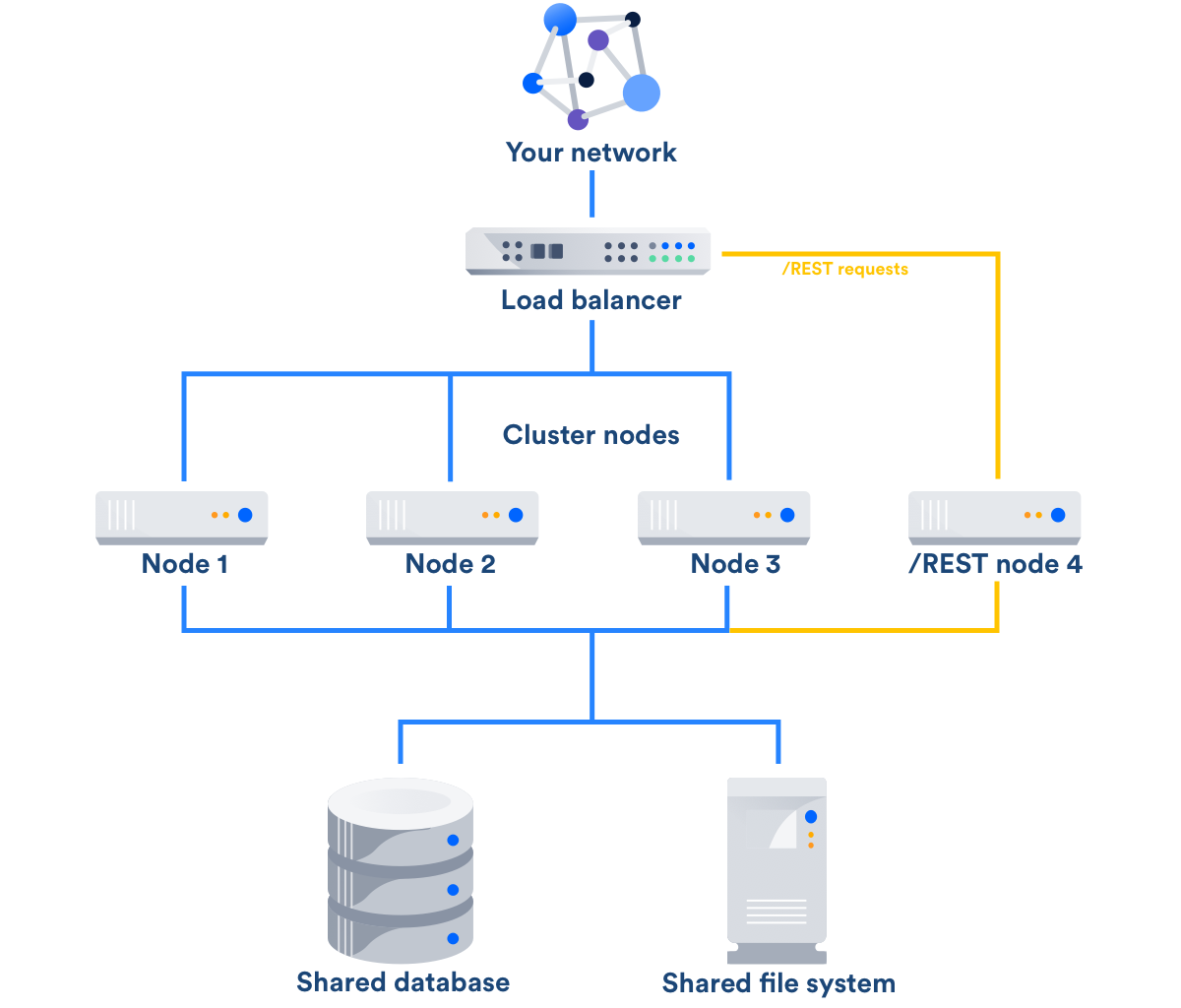

When deployed with Data Center, your application runs on a cluster configuration consisting of two or more nodes connected to a load balancer, a shared database, and a shared file system:

In a basic configuration, each application node runs its own copy of the application. For example, if you're running Jira Software 7.3.2, every node runs Jira Software 7.3.2. Typically, these nodes don't communicate with each other, and all data is stored in the shared database and file system that every node connects to. The load balancer is the single point of entry into the cluster.

Load balancer operation

In the basic configuration, the load balancer distributes traffic across the cluster nodes to minimize response time and avoid overloading any single node. The general process is:

- A user's client attempts to connect to the application cluster via the load balancer.

- After accepting the connection, the load balancer selects the optimal destination application node and routes the connection to it.

- The application node accepts the connection and responds to the client via the load balancer.

- The client receives the response and, because of the load balancer's sticky session configuration, sends all subsequent requests in that session to the same node.

The way the load balancer selects the target node depends on the algorithm you choose for your environment. Algorithms include, but aren't limited to, round-robin rotation, sending new connections to the node with the fewest active connections, and sending them to the node with the lowest CPU utilization. By balancing traffic efficiently across the cluster, the load balancer increases your application's capacity for concurrent users while keeping it stable.
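To make the selection step concrete, here is a minimal Python sketch of the three algorithms mentioned above. It's illustrative only and doesn't reflect how any particular load balancer is built. The `Node` record and its `healthy`, `active_connections`, and `cpu_percent` fields are hypothetical stand-ins for the health check and monitoring data a real load balancer collects. Unhealthy nodes are skipped, matching the high-availability function described in the overview.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True          # hypothetical: result of the latest health check
    active_connections: int = 0   # hypothetical: connections currently on this node
    cpu_percent: float = 0.0      # hypothetical: most recent CPU utilization sample


class RoundRobin:
    """Hand out healthy nodes in a fixed rotation."""

    def __init__(self, nodes):
        self.nodes = nodes
        self._cycle = itertools.cycle(nodes)

    def pick(self):
        # Walk at most one full rotation, skipping nodes that fail health checks.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node.healthy:
                return node
        raise RuntimeError("no healthy nodes available")


def least_connections(nodes):
    """Pick the healthy node with the fewest active connections."""
    healthy = [n for n in nodes if n.healthy]
    if not healthy:
        raise RuntimeError("no healthy nodes available")
    return min(healthy, key=lambda n: n.active_connections)


def lowest_cpu(nodes):
    """Pick the healthy node with the lowest reported CPU utilization."""
    healthy = [n for n in nodes if n.healthy]
    if not healthy:
        raise RuntimeError("no healthy nodes available")
    return min(healthy, key=lambda n: n.cpu_percent)
```

With two healthy nodes and one failed node, the round-robin selector alternates between the two healthy nodes, while the other two selectors return whichever healthy node currently has the lowest metric. Real load balancers update these metrics continuously, so the best choice can change from one connection to the next.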

Adding nodes increases concurrent usage capacity. For example, our internal load testing showed a nearly linear increase in concurrent user capacity for two-node and four-node clusters, compared with a single Jira Software Server instance at the same response time.

Setup and sticky sessions

Atlassian doesn't recommend any particular load balancer or balancing algorithm, and you're free to choose whichever software or hardware solution best fits your environment. The only configuration requirement for Data Center applications is that sticky sessions, or session affinity, be enabled on your load balancer. This guarantees that a user's requests are bound to the same node for the duration of their session. If a node fails and its users fail over to another node, they may have to log in to the application again.
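Session affinity can be pictured as a table that maps each session identifier to a node. The minimal Python sketch below is an illustration under stated assumptions, not any vendor's implementation: a new session is bound to the healthy node with the fewest bound sessions, later requests with the same ID return to that node, and a session whose node fails is rebound elsewhere. `StickyBalancer` and its methods are hypothetical; in practice, the session identifier is usually carried in a cookie.

```python
from collections import Counter


class StickyBalancer:
    """Hypothetical session-affinity table with failover."""

    def __init__(self, node_health):
        self.node_health = node_health   # node name -> True if passing health checks
        self.bindings = {}               # session ID -> node name

    def _least_loaded(self):
        # Choose the healthy node with the fewest sessions currently bound to it.
        healthy = [n for n, up in self.node_health.items() if up]
        if not healthy:
            raise RuntimeError("no healthy nodes available")
        load = Counter(self.bindings.values())
        return min(healthy, key=lambda n: load[n])

    def route(self, session_id):
        """Return (node, failed_over) for a request carrying session_id."""
        bound = self.bindings.get(session_id)
        if bound is not None and self.node_health.get(bound, False):
            return bound, False          # sticky: same node as earlier requests
        target = self._least_loaded()
        self.bindings[session_id] = target
        # failed_over is True when the session's original node went down; the new
        # node has no record of that session, so the user may have to log in again.
        return target, bound is not None
```

The `failed_over` flag marks the case described above: the replacement node doesn't hold the user's session, so the user may be asked to log in again.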

Load balancer options

Popular software load balancers include HAProxy, Apache, and Nginx.

Popular hardware load balancer vendors include F5, Citrix, Cisco, VMware, and Brocade.

You can find product-specific suggestions regarding load balancing configurations at the end of this article.

Traffic shaping

While standard load balancing increases concurrent capacity, traffic shaping gives you more granular control. Traffic shaping lets you categorize and prioritize particular types of traffic and route that traffic to specific nodes in your cluster.

For example, to achieve traffic shaping in a cluster with four nodes, you could dedicate the fourth node to receive only a particular type of traffic and then configure the load balancer to send all traffic of that type exclusively to the fourth node. The load balancer would distribute the remaining traffic among the other three nodes as described above. We've seen customers shape their traffic using Data Center in various ways including compartmentalizing API calls to one node and directing traffic from particular teams to specific nodes.
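The four-node example above amounts to splitting nodes into pools and routing each request by category. The following Python sketch shows that structure under stated assumptions: the rule list, `Pool`, `TrafficShaper`, and the `10.20.0.0/16` subnet standing in for one team's network are all hypothetical. Rules are checked in order, and traffic that matches no rule falls through to a default pool of three nodes, each pool being used round-robin.

```python
from ipaddress import ip_address, ip_network
from itertools import cycle


class Pool:
    """A group of nodes that share one category of traffic, used round-robin."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._rotation = cycle(self.nodes)

    def pick(self):
        return next(self._rotation)


class TrafficShaper:
    """Route each request to the pool of the first matching rule, else the default pool."""

    def __init__(self, rules, default_pool):
        self.rules = rules                # ordered list of (predicate, Pool) pairs
        self.default_pool = default_pool  # receives all traffic no rule claims

    def route(self, client_ip, path):
        for matches, pool in self.rules:
            if matches(client_ip, path):
                return pool.pick()
        return self.default_pool.pick()


# Hypothetical example: one team's office subnet gets node4 to itself.
TEAM_SUBNET = ip_network("10.20.0.0/16")

shaper = TrafficShaper(
    rules=[(lambda ip, path: ip_address(ip) in TEAM_SUBNET, Pool(["node4"]))],
    default_pool=Pool(["node1", "node2", "node3"]),
)
```

Rule order matters because the first match wins, so more specific rules should come before broader ones.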

REST API traffic

An increasingly popular traffic shaping technique among our customers is to direct external REST API traffic to a dedicated node or a set of nodes.

As your application usage grows, you may find that many users are building their own services on the application's REST API. Some of these services may be computationally intensive, such as scripts that retrieve reports automatically on a schedule. Numerous external services like these can degrade application responsiveness, particularly at peak times when users are performing standard operations, such as creating and updating Jira Software issues.

You can either block this usage or accommodate it. If you accommodate it, it's hard to rely on users to build or rebuild their services so that they call a dedicated node. A better solution is to configure the load balancer for traffic shaping.

With traffic shaping, you configure the load balancer to identify all external REST API traffic and send it to a dedicated node (or set of nodes) that handles only REST API traffic. The other application nodes in your cluster then serve only standard user requests and aren't slowed down by external services.

Although we've only tested this traffic shaping technique with Jira Software so far, it can likely be applied to any Atlassian application that serves REST calls, such as Bitbucket.

Configuration

First, if you don't already have one, provision a dedicated node in your cluster to handle all external REST API traffic. The node needs no special configuration, because the load balancer does the shaping.

When configuring your load balancer to send REST API traffic to the dedicated node, it's important to match only external REST API traffic. Jira Software, for example, makes many REST calls to itself from its own user interface, so redirecting all REST API traffic would interfere with the normal operation of the application.

Additionally, you don't want to redirect requests for the login page. Doing so would create the user's session on the dedicated node, while their subsequent requests would go to other nodes where they have no session. Check both the referrer and the requested path before redirecting REST API traffic.

Configure the load balancer to send REST API traffic to the dedicated node only when both of the following conditions are met:

- The request's referrer is external to the application

- The request isn't for the login page

For the four-node cluster setup, the configuration could look like this:

If referrer == app.yourdomain.com and the request ~ /rest:
    Direct traffic to Node 1, Node 2, or Node 3    // normal traffic
If referrer != app.yourdomain.com and the request ~ /rest:
    If the page requested == /login.jsp:
        Direct traffic to Node 1, Node 2, or Node 3    // normal traffic
    Else:
        Direct traffic to Node 4    // external REST API traffic
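The rules above can be restated as a small Python function, which also makes it easy to check by hand which pool a given request would reach. This is a sketch of the decision logic only, not a configuration for any specific load balancer. `choose_pool`, `is_internal_referrer`, and the node names are hypothetical, and `app.yourdomain.com` is the placeholder from the rules. It assumes that a request with no Referer header counts as external, that REST paths contain `/rest/`, and that the application may sit under a context path such as `/jira`.

```python
from urllib.parse import urlsplit

APP_HOST = "app.yourdomain.com"          # placeholder host from the rules above
NORMAL_NODES = ["node1", "node2", "node3"]
REST_NODES = ["node4"]                   # dedicated external REST API node


def is_internal_referrer(referer):
    """True only when the Referer header names the application's own host."""
    if not referer:
        # Scripts and integrations often send no Referer; treat that as external.
        return False
    return urlsplit(referer).hostname == APP_HOST


def choose_pool(request_target, referer):
    """Return the candidate nodes for one request, following the rules above."""
    path = urlsplit(request_target).path
    # Login requests always go to the normal nodes so the session is created there.
    # The endswith and "in" checks tolerate a context path such as /jira (assumption).
    if path.endswith("/login.jsp"):
        return NORMAL_NODES
    # External REST calls go to the dedicated node; the app's own UI calls do not.
    if "/rest/" in path and not is_internal_referrer(referer):
        return REST_NODES
    return NORMAL_NODES
```

In a real deployment you would express the same conditions in your load balancer's own rule language (for example, ACLs in HAProxy) and let the pool's balancing algorithm choose a node within the returned pool.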

You may need to restart the load balancer to apply the traffic shaping configuration. Test the configuration to make sure it works as expected.

AWS

The Elastic Load Balancer provided by Amazon Web Services uses its own traffic shaping policies, and you can't use the configuration mentioned above. To shape REST API traffic using AWS infrastructure, you'll need to run another software load balancer on AWS.

Results

One of our customers recently implemented traffic shaping in their Jira Software Data Center:

- Their REST API node received four times as much traffic as their application nodes.

- Their application nodes showed lower CPU utilization than their single-instance Jira deployment.

Related links

Load balancer setup

- Confluence Data Center load balancer configuration suggestions

- Jira Data Center load balancer examples

- Jira Data Center using NGINX Plus load balancer

- Bitbucket Data Center load balancer setup
